Despite low yields caused by a significant but easy-to-fix design issue, Nvidia will produce around 450,000 Blackwell-based AI GPUs, according to analysts from Morgan Stanley. If the information is accurate and the company manages to sell these units this calendar year, this could translate into a revenue opportunity of over $10 billion.

“Blackwell chips are expected to see 450,000 units produced in the fourth quarter of 2024, translating into a potential revenue opportunity exceeding $10 billion for Nvidia,” analysts from investment bank Morgan Stanley wrote in a note to clients, according to The_AI_Investor, a blogger who tends to have access to such notes.

While the $10 billion and 450,000 figures look significant, they imply that Nvidia will sell its high-demand, short-supply Blackwell GPUs for around $22,000 per unit, far below the rumored $70,000 per Blackwell GPU module. Although the actual pricing of data center hardware depends on factors such as volumes and demand, selling the very first batches of ultra-high-end GPUs at prices lower than those of Nvidia’s current-generation H100 is a bit odd.
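The roughly $22,000 figure comes from dividing the projected revenue by the projected unit count. The sketch below simply reproduces that division with the Morgan Stanley estimates; it assumes the entire $10 billion is attributed to the 450,000 GPUs and ignores any other Blackwell revenue. Since the note says the opportunity *exceeds* $10 billion, the result is best read as an approximate lower bound. The helper name `implied_unit_price` is hypothetical.

```python
# Implied average revenue per Blackwell GPU, assuming the whole
# $10 billion estimate comes from the 450,000 projected units.
PROJECTED_REVENUE_USD = 10_000_000_000
PROJECTED_UNITS = 450_000


def implied_unit_price(revenue_usd: float, units: int) -> float:
    """Average revenue per unit if all revenue comes from these units."""
    return revenue_usd / units


implied_price = implied_unit_price(PROJECTED_REVENUE_USD, PROJECTED_UNITS)
print(f"Implied price per GPU: ${implied_price:,.0f}")  # prints "Implied price per GPU: $22,222"
```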

Nvidia would, of course, prefer to sell ‘reference’ AI server cabinets powered by its Blackwell processors: the NVL36, featuring 36 B200 GPUs and expected to cost $1.8 million to $2 million, and the NVL72, with 72 B200 GPUs inside, expected to start at $3 million. Selling cabinets instead of individual GPUs, GPU modules, or even DGX and HGX servers is much more profitable, and earning $10 billion by selling such machines would not be unexpected. However, in that case Nvidia would not need to supply 450,000 GPUs to earn $10 billion in revenue.
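A back-of-the-envelope check shows why cabinet sales change the math. The sketch below uses the expected prices cited above (the $2 million upper end of the NVL36 range and the $3 million NVL72 starting price) and estimates how many B200 GPUs Nvidia would have to ship to reach $10 billion if it sold only one cabinet type. These are press expectations rather than official list prices, the single-product mix is an assumption, and the `gpus_needed` helper is hypothetical.

```python
import math

TARGET_REVENUE_USD = 10_000_000_000

# (name, expected cabinet price in USD, B200 GPUs per cabinet).
# Prices are reported expectations, not official list prices.
CABINETS = [
    ("NVL36", 2_000_000, 36),
    ("NVL72", 3_000_000, 72),
]


def gpus_needed(target_usd: int, cabinet_price_usd: int, gpus_per_cabinet: int) -> tuple[int, int]:
    """Return (cabinets, GPUs) needed to reach target_usd selling only this cabinet."""
    cabinets = math.ceil(target_usd / cabinet_price_usd)  # round up to whole cabinets
    return cabinets, cabinets * gpus_per_cabinet


for name, price, gpus in CABINETS:
    cabinets, total_gpus = gpus_needed(TARGET_REVENUE_USD, price, gpus)
    print(f"{name}: {cabinets:,} cabinets, {total_gpus:,} GPUs")
```

Under these assumptions, either configuration reaches $10 billion with roughly 180,000 to 240,000 GPUs, well short of 450,000.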

Back in late August, Nvidia said that it had to change the photomask design of its B100/B200 GPUs to improve production yields. The company also said that the processors would enter mass production in the fourth quarter and that production would continue into fiscal 2026. In the fourth quarter of fiscal 2025 (which begins in late October for the company), Nvidia expects to ship ‘several billion dollars in Blackwell revenue,’ which is significantly lower than the sum cited by Morgan Stanley analysts.

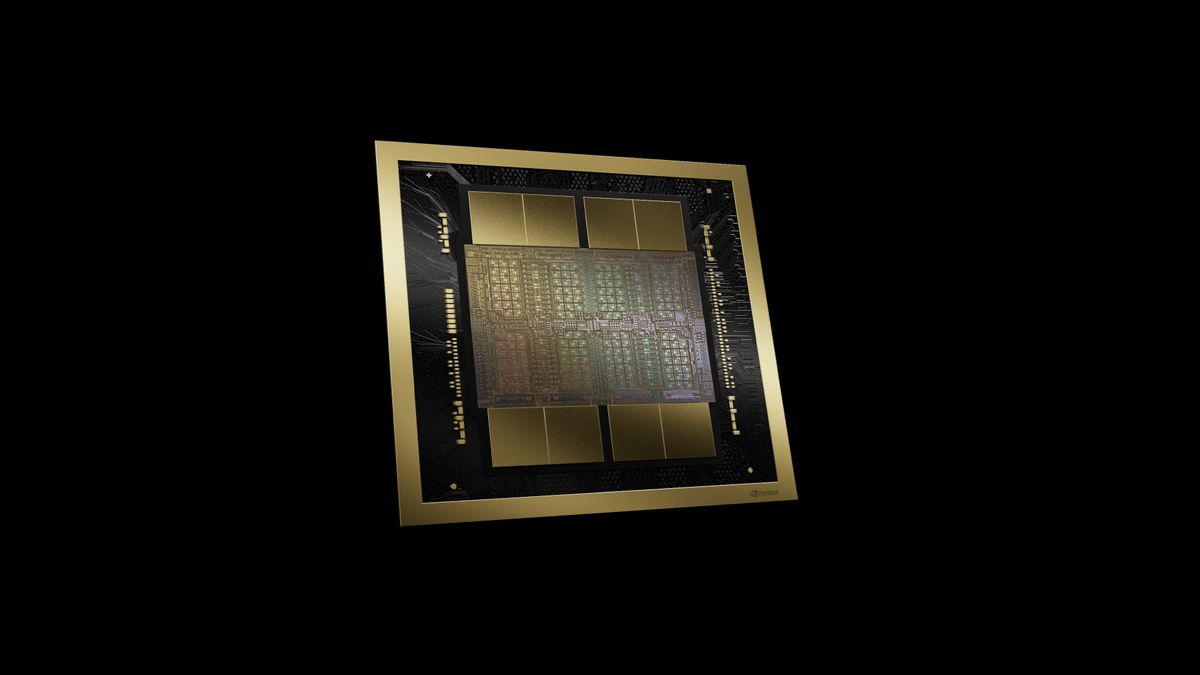

According to a SemiAnalysis report, a mismatch between the coefficients of thermal expansion (CTE) of Blackwell’s chiplets and its packaging materials caused the failures. Nvidia’s B100 and B200 GPUs are the first to use TSMC’s CoWoS-L packaging, which links chiplets using passive local silicon interconnect (LSI) bridges integrated into a redistribution layer (RDL) interposer. Precise placement of these bridges is crucial, but the CTE mismatch between the chiplets, bridges, organic interposer, and substrate caused warping and system failure. Nvidia reportedly had to redesign the GPU’s top metal layers to improve yields but did not disclose details, mentioning only new masks. The company clarified that no functional changes were made to the Blackwell silicon and that the changes focused solely on improving yields.

Because of the need to change the design of the B100 and B200 GPUs, as well as a shortage of packaging capacity for TSMC’s CoWoS-L (a vastly different method from the proven CoWoS-S technology), analysts did not expect Nvidia to ship many Blackwell-based processors in the fourth quarter of calendar 2024 or in the fourth quarter of the company’s fiscal 2025.