Passively Purloining Private Packets

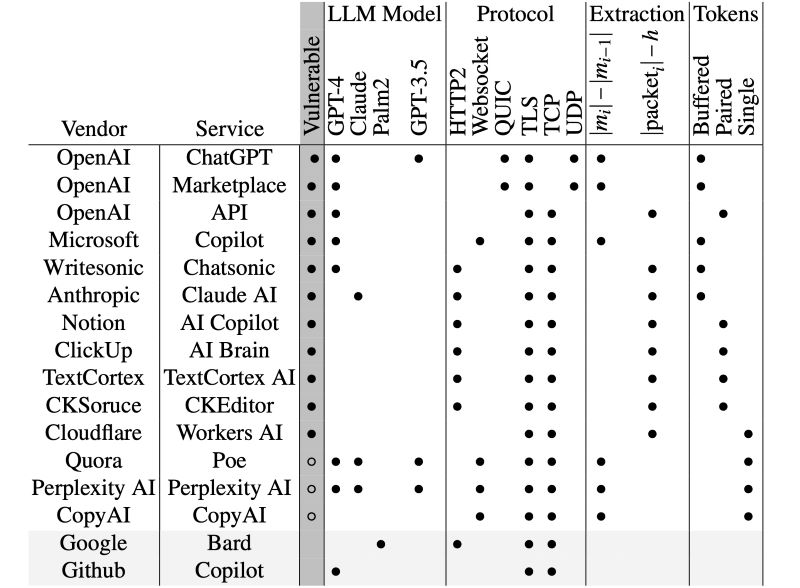

AI assistants have been in the news lately, and not in a way their designers hoped. If the Morris 2 self-replicating AI worm wasn't enough to make you question their use, perhaps learning that people can read the supposedly encrypted responses to your queries will give you pause. The attack is ridiculously easy to carry out, relatively effective, and completely undetectable. Anyone who can observe the traffic between you and the AI assistant, such as someone on the same network, can pull it off. No malware needs to be installed and no credentials need to be stolen or faked. The weakness isn't the encryption itself. The assistants stream their responses one token at a time, so the size of each encrypted packet reveals the length of the token inside it, and an LLM can be trained to reconstruct the response from that sequence of token lengths. Of the major assistants, only Google's Gemini is unaffected.
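To make the side channel concrete, here is a toy sketch of what a passive observer sees. It assumes each streamed token travels in its own encrypted record, that the cipher adds no padding, and that every record carries the same fixed overhead. `RECORD_OVERHEAD` and its value are hypothetical, as are the helper names. The `consistent` filter is only a crude stand-in for the trained LLM described above; it is not the researchers' method.

```python
from typing import List

# Hypothetical constant per-record overhead (framing + authentication tag), in bytes.
RECORD_OVERHEAD = 21


def simulate_stream(tokens: List[str], overhead: int = RECORD_OVERHEAD) -> List[int]:
    """Ciphertext sizes an eavesdropper would observe, one per streamed token.

    Assumes no padding, so ciphertext size = plaintext size + fixed overhead.
    """
    return [len(tok.encode("utf-8")) + overhead for tok in tokens]


def token_lengths(record_sizes: List[int], overhead: int = RECORD_OVERHEAD) -> List[int]:
    """Recover each token's plaintext length by subtracting the fixed overhead."""
    return [size - overhead for size in record_sizes]


def consistent(candidate_tokens: List[str], lengths: List[int]) -> bool:
    """Toy check: does a guessed response produce the observed length pattern?"""
    return [len(tok.encode("utf-8")) for tok in candidate_tokens] == lengths


if __name__ == "__main__":
    reply = ["I", " recommend", " seeing", " a", " doctor", " about", " that", " rash", "."]
    observed = simulate_stream(reply)  # all the attacker ever captures
    leaked = token_lengths(observed)
    print(leaked)
    # A guess with the same length pattern passes; a different one does not.
    print(consistent([" a", " doctor"], leaked[3:5]))
    print(consistent([" an", " expert"], leaked[3:5]))
```

The encryption hides every character, yet the length pattern survives intact, and that pattern is the signal a trained model uses to narrow down which words were sent.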

The researchers who discovered the flaw could infer the topic of a response more than 50% of the time and reconstruct the entire response word for word 29% of the time. Since the attack only requires someone to observe your traffic, there is no way to know whether your conversations are being eavesdropped on. To make matters worse, the LLM trained to decode the traffic will probably only get more accurate as it gets more training data.

Ars Technica delves into the specifics of the attacks, or you could ask your AI assistant if you dare.